What’s QUIC?

Get ready for the future of the internet with HTTP/3, the latest major version of the HTTP protocol. With HTTP/3 now being rolled out, website owners and internet users alike can look forward to faster, more reliable, and more secure online experiences. This is thanks to its foundation on a new transport protocol, QUIC (originally short for Quick UDP Internet Connections; in the IETF standard, QUIC is simply a name rather than an acronym).

In May 2021, the IETF standardized QUIC in RFC 9000, supported by RFC 8999, RFC 9001, and RFC 9002. Then, on 7 June 2022, the IETF officially published QUIC-based HTTP/3 as a Proposed Standard in RFC 9114.

At present, the deployment of QUIC is accelerating worldwide. With the arrival of the IETF QUIC v1 standard, more and more websites are serving traffic over QUIC. According to W3Techs statistics, about 25.5% of websites currently use HTTP/3.

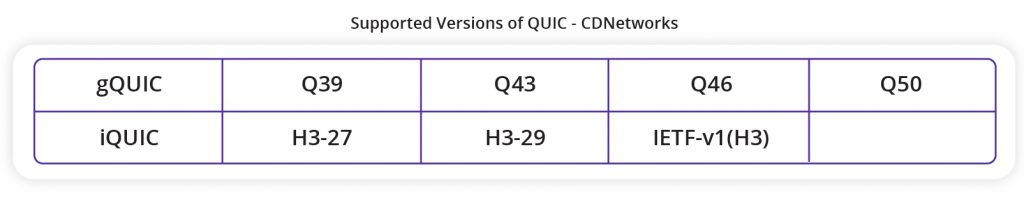

As a pioneer in the networking field, CDNetworks has taken the lead in delivering comprehensive support for the QUIC protocol. The table below shows the QUIC versions that CDNetworks currently supports.

How Does It Work?

Viewed from one angle, QUIC can be summed up as HTTP/2-style multiplexing + TLS + UDP, with UDP and QUIC together forming the transport layer.

QUIC uses UDP as its underlying transport. This avoids TCP’s connection-setup round trip and strict in-order delivery, and it lets QUIC pass through network middleboxes that may interfere with TCP. QUIC also includes built-in encryption based on TLS 1.3, which secures communication between the endpoints and makes it harder for third parties to intercept and manipulate traffic. Add command and version-negotiation ideas from protocols like SMB, plus a set of new protocol concepts and efficiencies, and you get QUIC: it combines the speed and efficiency of UDP, the security of TLS, and the capabilities of HTTP/2 to create a reliable, high-performance transport protocol for the modern internet.

Why is QUIC Important?

Before QUIC, TCP was the underlying protocol for transferring HTTP data. As the mobile internet continues to develop, however, demand is growing for real-time interaction and for support of more diverse network scenarios. In addition, smartphones and portable devices have become mainstream, with over 60% of internet traffic currently transmitted wirelessly.

TCP, a transport-layer protocol that has been in use for over 40 years, has inherent performance bottlenecks in today’s environment of large-scale, long-distance transmission, poor mobile networks, and frequent network switching. It struggles to meet these demands for three main reasons:

Large Handshake Delay in Establishing Connections

HTTP/1.0, HTTP/1.1, HTTPS, and HTTP/2 all use TCP for transmission. HTTPS and, in practice, HTTP/2 also rely on the TLS protocol for secure transmission. This leads to two handshake delays:

- The delay in establishing a TCP connection, caused by the TCP three-way handshake (1 RTT).

- The TLS handshake delay: a full handshake (as in TLS 1.2) requires 2 RTTs, and an abbreviated handshake that resumes an earlier session still requires 1 RTT.

In scenarios with many short-lived connections, these handshake delays have a significant impact and cannot be eliminated.

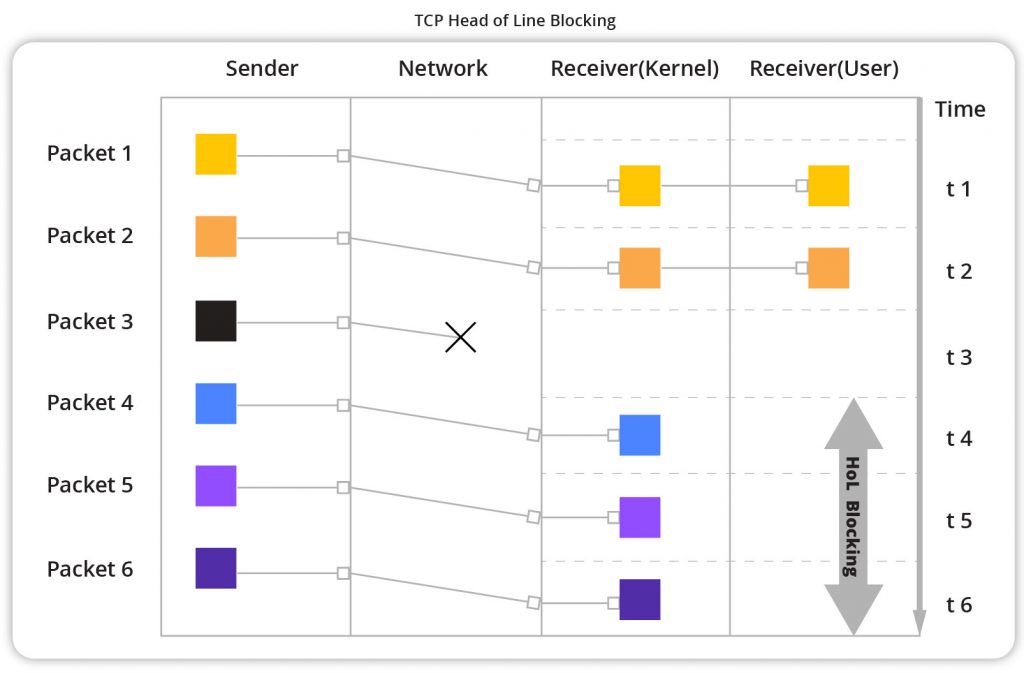

Head-of-Line Blocking

Take HTTP/2 multiplexing as an example. Multiple requests are sent as separate streams over the same TCP connection, but TCP delivers all of their bytes to the application layer as a single ordered sequence. If a packet carrying data for one stream is lost, the data of the streams behind it is held back until the lost packet is retransmitted; the application layer is not notified even if the receiver has already received the packets for those later streams. This problem is called TCP head-of-line (HoL) blocking.

Promoting Updates to the TCP Protocol Is Not That Easy

TCP was originally designed to allow ports, options, and features to be added and modified. However, because TCP and its well-known ports and options have been around for so long, many middleboxes (such as firewalls and routers) have come to depend on these implicit conventions. As a result, changes to the protocol may not be handled properly by these middleboxes, which can lead to interoperability issues.

TCP is implemented in operating system kernels, and upgrading the operating system on user devices is very difficult, since many old platforms such as Windows XP still have a large number of users. In addition, TCP users are comfortable with its existing behavior and may resist changes that could affect it. As a result, rolling out updates to TCP quickly is not easy.

Why Do We Need QUIC?

QUIC addresses many of these last-mile network transmission problems. Here are its key improvements:

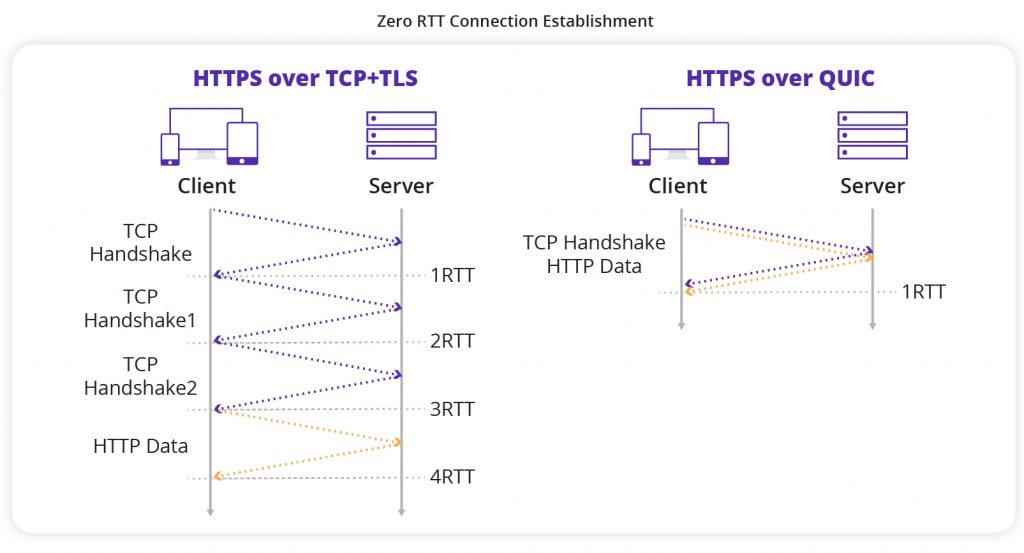

Fast Handshake and Connection Establishment

QUIC optimizes connection setup in two ways:

- The transport runs over UDP, which removes the 1 RTT spent on the TCP three-way handshake.

- It adopts the latest version of TLS, TLS 1.3, which supports both 1-RTT and 0-RTT handshakes and allows a returning client to send application data before the handshake completes. With QUIC, the first handshake takes 1 RTT, while a previously connected client can use cached information to resume the secure connection with 0 RTT (falling back to 1 RTT if the server does not accept the early data).
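The round-trip counts discussed so far can be turned into a rough back-of-the-envelope comparison. The sketch below is illustrative only: the table `HANDSHAKE_RTTS`, the function `setup_delay_ms`, and the 100 ms RTT are assumptions made for this example, and it ignores processing time, packet loss, and optimizations such as TCP Fast Open.

```python
# Round trips spent on handshakes before the first application request can be sent.
# The names in this table are illustrative labels, not protocol identifiers.
HANDSHAKE_RTTS = {
    "TCP + TLS 1.2 (full handshake)": 1 + 2,   # TCP three-way handshake + full TLS handshake
    "TCP + TLS 1.2 (resumed session)": 1 + 1,  # TCP handshake + abbreviated TLS handshake
    "TCP + TLS 1.3": 1 + 1,                    # TCP handshake + 1-RTT TLS 1.3 handshake
    "QUIC (first connection)": 1,              # transport and TLS 1.3 handshakes combined
    "QUIC (resumed, 0-RTT)": 0,                # request is sent alongside the handshake
}


def setup_delay_ms(rtt_ms):
    """Return the handshake delay in milliseconds for each profile at the given RTT."""
    return {name: rtts * rtt_ms for name, rtts in HANDSHAKE_RTTS.items()}


if __name__ == "__main__":
    # 100 ms is an assumed round-trip time, e.g. a long-distance mobile link.
    for name, delay in setup_delay_ms(100).items():
        print(f"{name:34s} {delay:5.0f} ms")
```

On a link with a 100 ms round trip, this simple model puts a full TCP + TLS 1.2 setup at 300 ms before the first request, against 100 ms for a first QUIC connection and no handshake delay for a resumed one.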

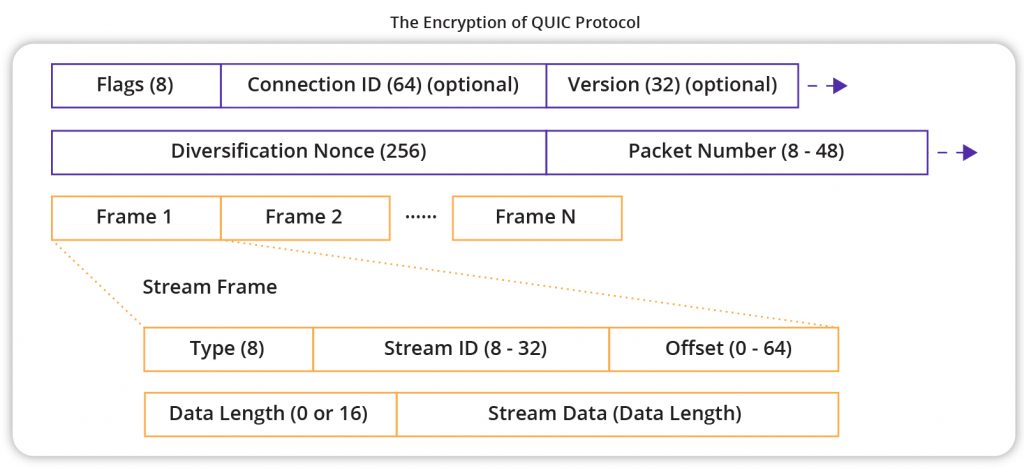

Authenticated and Encrypted Packets

A traditional TCP header is neither encrypted nor authenticated, which leaves it vulnerable to tampering, injection, and eavesdropping by intermediaries. QUIC packets, in contrast, are heavily protected. Except for a few messages such as PUBLIC_RESET and CHLO (names from Google QUIC), all packet headers are authenticated and all message bodies are encrypted. Any modification of a QUIC packet can therefore be detected promptly by the receiver, effectively reducing security risks.

As shown in the figure below, the content in purple is the authenticated header of the Stream Frame packet, while the yellow part is the encrypted content:

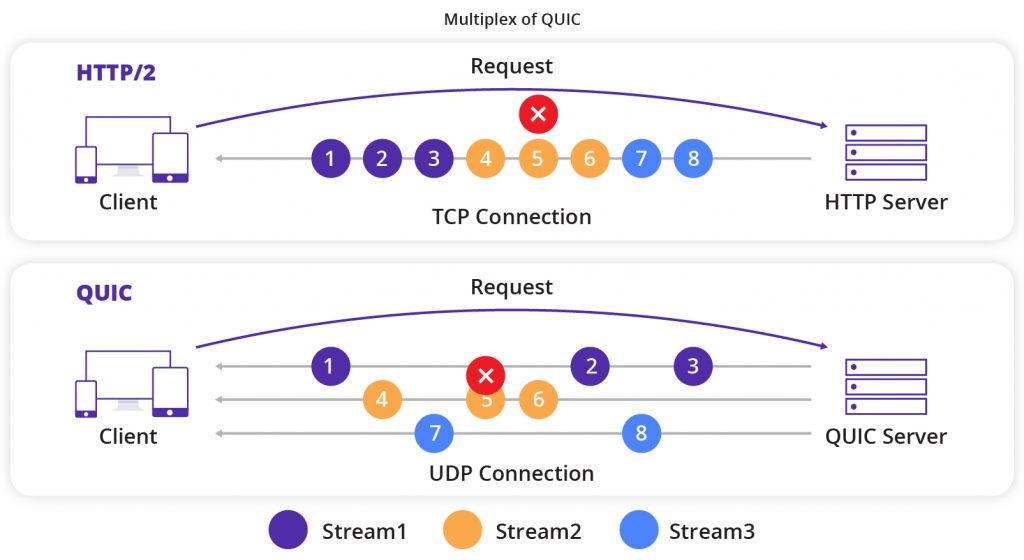
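To illustrate why an authenticated header makes tampering detectable, here is a deliberately simplified sketch. It uses HMAC-SHA256 from the Python standard library as a stand-in; real QUIC uses AEAD ciphers negotiated through TLS 1.3, which encrypt the payload and authenticate the header in a single operation. The functions `seal` and `verify`, the key, and the header bytes are all hypothetical, and the payload is treated as opaque bytes that have already been encrypted.

```python
import hashlib
import hmac

TAG_LEN = 32  # size of an HMAC-SHA256 tag in bytes


def _tag(key, header, ciphertext):
    # Prefix the header length so the header/payload boundary is unambiguous.
    message = len(header).to_bytes(2, "big") + header + ciphertext
    return hmac.new(key, message, hashlib.sha256).digest()


def seal(key, header, ciphertext):
    """Append a tag covering both the cleartext header and the encrypted payload."""
    return ciphertext + _tag(key, header, ciphertext)


def verify(key, header, sealed):
    """Return True only if neither the header nor the payload was modified."""
    ciphertext, tag = sealed[:-TAG_LEN], sealed[-TAG_LEN:]
    return hmac.compare_digest(tag, _tag(key, header, ciphertext))


if __name__ == "__main__":
    key = b"k" * 32                      # assumed shared secret for the example
    header = b"conn-id=1234;pn=7"        # made-up header layout
    sealed = seal(key, header, b"opaque-encrypted-payload")
    print(verify(key, header, sealed))                 # True
    print(verify(key, b"conn-id=9999;pn=7", sealed))   # False: header was altered
```

The header travels in the clear, yet any change to it invalidates the tag, which is the property the paragraph above describes.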

Improving Multiplexing to Avoid HoL Blocking

QUIC multiplexes multiple streams over a single connection. Because each stream has its own ordered delivery and flow control, QUIC avoids the head-of-line blocking problem that, with TCP, affects the entire connection.

QUIC’s multiplexing is similar to HTTP/2’s: multiple HTTP requests (streams) can be sent concurrently over a single QUIC connection. Where QUIC goes beyond HTTP/2 is that there is no ordering dependency between the streams on a connection. If stream 2 loses a UDP packet, only the processing of stream 2 is affected; data transmission for streams 1 and 3 is not blocked. As a result, a loss on one stream does not cause head-of-line blocking for the others.

In addition, HTTP/3 running over QUIC uses QPACK, a variation of the HPACK header compression format. QPACK reduces the amount of redundant header data sent over the network and is designed so that header compression itself does not reintroduce head-of-line blocking. As a result, QUIC can often deliver more data than TCP under weak network conditions.
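The difference between the two delivery models can be shown with a small simulation. This is a toy model under stated assumptions, not an implementation of either protocol: the packet tuples, the `LOST` set, and both function names are invented for the example, and each packet carries exactly one chunk of one stream.

```python
from collections import defaultdict

# Each packet: (connection-wide sequence number, stream id, per-stream chunk offset).
PACKETS = [
    (0, 1, 0),
    (1, 2, 0),  # this packet is lost in transit
    (2, 3, 0),
    (3, 1, 1),
    (4, 3, 1),
]
LOST = {1}


def delivered_tcp_like(packets, lost):
    """Single ordered byte stream: nothing after the first gap reaches the application."""
    counts = {stream: 0 for _, stream, _ in packets}
    for seq, stream, _ in sorted(packets):
        if seq in lost:
            break  # the gap stalls every later chunk, whichever stream it belongs to
        counts[stream] += 1
    return counts


def delivered_quic_like(packets, lost):
    """Per-stream ordering: a gap only stalls the stream that owns the lost chunk."""
    counts = {stream: 0 for _, stream, _ in packets}
    received = defaultdict(set)
    for seq, stream, offset in packets:
        if seq not in lost:
            received[stream].add(offset)
    for stream, offsets in received.items():
        while counts[stream] in offsets:  # deliver the contiguous prefix of each stream
            counts[stream] += 1
    return counts


if __name__ == "__main__":
    print(delivered_tcp_like(PACKETS, LOST))   # {1: 1, 2: 0, 3: 0}
    print(delivered_quic_like(PACKETS, LOST))  # {1: 2, 2: 0, 3: 2}
```

With one packet of stream 2 missing, the TCP-like model hands over a single chunk in total, while the QUIC-like model still delivers everything that arrived for streams 1 and 3.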

Pluggable Congestion Control

QUIC supports pluggable congestion control: standard algorithms such as Cubic, BBR, and Reno can be used, or private algorithms can be customized for specific scenarios. “Pluggable” means that an algorithm can be enabled, swapped, or disabled flexibly. This is reflected in the following aspects:

- Congestion control algorithms can be implemented at the application layer without operating system or kernel support, whereas traditional TCP congestion control is implemented in the kernel’s network stack.

- Different connections of a single application are allowed to support different congestion control configurations.

- Applications can change congestion control without downtime or upgrades: it is enough to modify the configuration and reload it on the server side.
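One way to picture "pluggable" is as a small interface that each connection instantiates from configuration. The sketch below is a hypothetical design, not the API of any real QUIC stack: the `CongestionController` interface, the `ALGORITHMS` registry, the `congestion_control` config key, and the heavily simplified Reno-like rule (no slow start) are all assumptions.

```python
from abc import ABC, abstractmethod


class CongestionController(ABC):
    """Hypothetical per-connection interface; real QUIC stacks define their own."""

    def __init__(self, initial_window=10.0):
        self.cwnd = initial_window  # congestion window, in packets

    @abstractmethod
    def on_ack(self):
        """Called when a packet is acknowledged."""

    @abstractmethod
    def on_loss(self):
        """Called when a packet is declared lost."""


class RenoLike(CongestionController):
    """Simplified additive increase, multiplicative decrease."""

    def on_ack(self):
        self.cwnd += 1.0 / self.cwnd  # roughly one extra packet per round trip

    def on_loss(self):
        self.cwnd = max(1.0, self.cwnd / 2)


class FixedWindow(CongestionController):
    """A trivial 'private' algorithm that never changes its window."""

    def on_ack(self):
        pass

    def on_loss(self):
        pass


# Registry consulted at connection setup; reloading the config changes new connections only.
ALGORITHMS = {"reno": RenoLike, "fixed": FixedWindow}


def new_connection(config):
    """Pick the controller named in the config, defaulting to the Reno-like one."""
    return ALGORITHMS[config.get("congestion_control", "reno")]()


if __name__ == "__main__":
    a = new_connection({"congestion_control": "reno"})
    b = new_connection({"congestion_control": "fixed"})
    a.on_loss()
    b.on_loss()
    print(type(a).__name__, a.cwnd)
    print(type(b).__name__, b.cwnd)
```

Because the controller is an ordinary user-space object chosen per connection, two connections in the same process can run different algorithms, and changing the configured name needs no kernel change.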

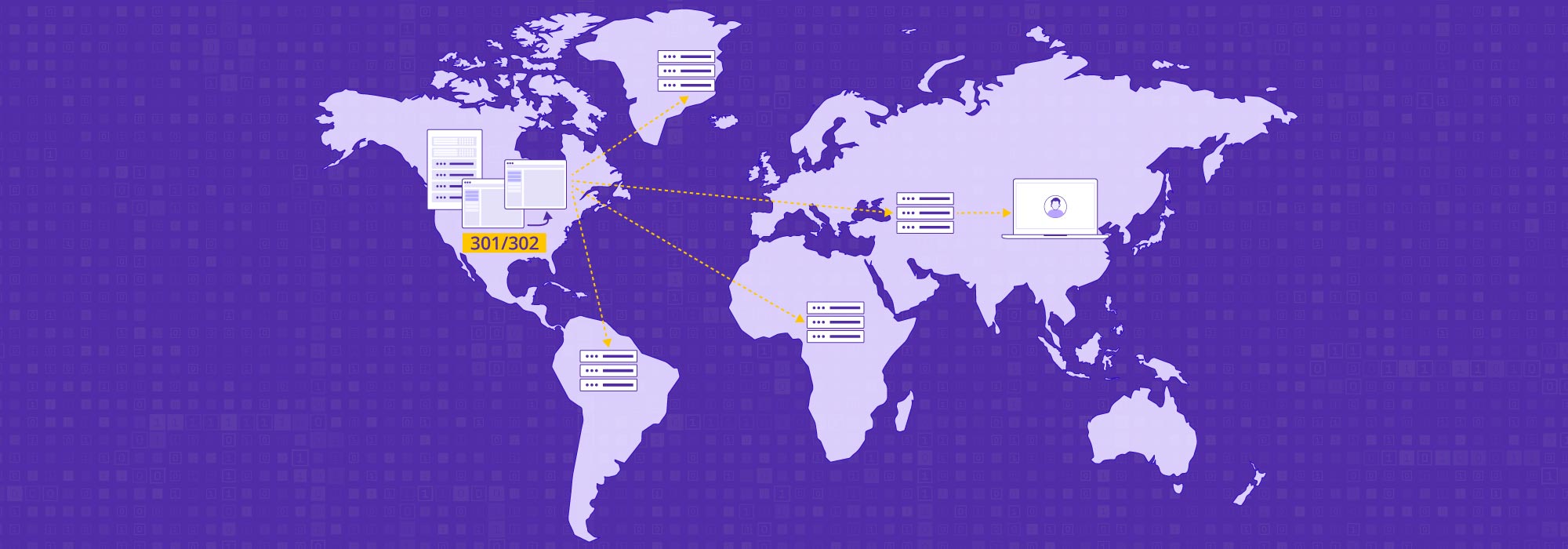

Connection Migration

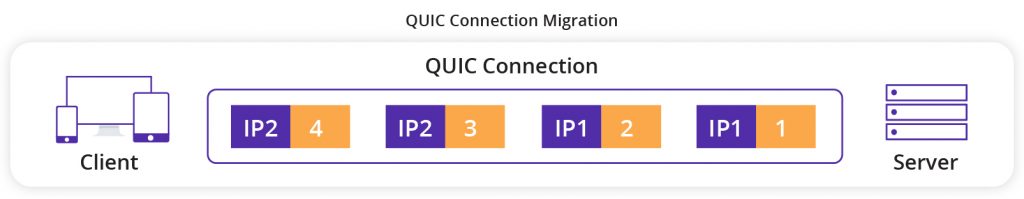

TCP connections are identified by a 4-tuple: source IP, source port, destination IP, and destination port. If any of these changes, the connection must be re-established. QUIC connections, by contrast, are identified by a Connection ID (64 bits in Google QUIC; variable length, up to 20 bytes, in IETF QUIC v1), so the connection can be maintained without disconnecting and reconnecting as long as the Connection ID stays the same.

For example, if a client sends packets 1 and 2 using IP1 and then switches networks, changing to IP2 and sending packets 3 and 4, the server can recognize that all four packets are from the same client based on the Connection ID field in the packet header. The fundamental reason why QUIC can achieve connection migration is that the underlying UDP protocol is connectionless.
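The lookup difference behind this example can be sketched with two toy servers. Everything here is illustrative: the class names, the addresses, and the connection ID value are made up, and a real QUIC server would also validate the new network path before sending much data to it.

```python
class TcpLikeServer:
    """Sessions are keyed by the 4-tuple, so a new client address means a new session."""

    def __init__(self):
        self.sessions = {}

    def receive(self, src_ip, src_port, dst_ip, dst_port, payload):
        key = (src_ip, src_port, dst_ip, dst_port)
        self.sessions.setdefault(key, []).append(payload)


class QuicLikeServer:
    """Sessions are keyed by the connection ID carried in each packet header."""

    def __init__(self):
        self.sessions = {}

    def receive(self, src_ip, src_port, connection_id, payload):
        session = self.sessions.setdefault(connection_id, {"peer": None, "payloads": []})
        session["peer"] = (src_ip, src_port)  # remember the client's latest address
        session["payloads"].append(payload)


if __name__ == "__main__":
    tcp, quic = TcpLikeServer(), QuicLikeServer()
    ip1, ip2 = "198.51.100.7", "203.0.113.9"  # documentation-range addresses
    for ip, packet in [(ip1, "p1"), (ip1, "p2"), (ip2, "p3"), (ip2, "p4")]:
        tcp.receive(ip, 50000, "192.0.2.1", 443, packet)
        quic.receive(ip, 50000, "c0ffee", packet)
    print(len(tcp.sessions))                     # 2: the address change split the session
    print(len(quic.sessions))                    # 1
    print(quic.sessions["c0ffee"]["payloads"])   # ['p1', 'p2', 'p3', 'p4']
```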

Forward Error Correction (FEC)

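Some QUIC implementations also support Forward Error Correction (FEC), which dynamically adds redundant FEC packets to reduce the number of retransmissions and improve transmission efficiency in poor network environments. FEC was part of early Google QUIC and is not included in the IETF QUIC v1 specification (RFC 9000).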
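The idea behind FEC can be shown with the simplest possible scheme, a single XOR parity packet per group, which lets the receiver rebuild one lost packet without a retransmission. This is a generic sketch rather than the scheme of any particular QUIC implementation; the function names are hypothetical, and it assumes all packets in a group have the same length.

```python
from functools import reduce


def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets):
    """Build one parity packet for a group of equal-length packets."""
    return reduce(xor_bytes, packets)


def recover(received, parity, group_size):
    """Rebuild at most one missing packet.

    `received` maps packet index -> payload for the packets that arrived.
    With zero or more than one loss, the input is returned unchanged.
    """
    missing = [i for i in range(group_size) if i not in received]
    repaired = dict(received)
    if len(missing) == 1:
        # XOR of the parity with every received packet leaves only the lost one.
        repaired[missing[0]] = reduce(xor_bytes, received.values(), parity)
    return repaired


if __name__ == "__main__":
    group = [b"AAAA", b"BBBB", b"CCCC"]
    parity = make_parity(group)
    arrived = {0: group[0], 2: group[2]}  # packet 1 was lost
    print(recover(arrived, parity, len(group))[1])  # b'BBBB'
```

The cost is one extra packet per group, which is why such redundancy is best added dynamically, only when the network is lossy.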

HTTP/3 and QUIC Will Demonstrate Their Value in More Fields

As HTTP/3 and QUIC gain traction and become more widely adopted, we can expect a wide range of use cases to emerge, whether in live streaming, video on demand, downloads, or web acceleration. Some of the most promising application scenarios include:

Real-time Applications

HTTP/3 and QUIC are well suited to real-time applications that require low-latency, high-throughput connections, such as video conferencing, online gaming, and live streaming. Thanks to QUIC’s greater resilience on poor networks and its connection migration, video startup time can be improved, and the video stuttering rate and request failure rate can be reduced.

IoT

In IoT scenarios, terminal devices often operate in complex and unpredictable environments, such as high-speed movement, offshore, and mountainous settings, with very limited network resources. The TCP-based Message Queuing Telemetry Transport (MQTT) protocol often suffers frequent connection interruptions and high server and client overhead during reconnection. QUIC’s 0-RTT reconnection, 1-RTT connection establishment, and multiplexing show their advantages on poor and unstable networks, improving content transmission efficiency and the user experience.

Cloud Computing

With more and more applications and services moving to the cloud, there is a need for more efficient and reliable connections between clients and servers. With the ability to handle multiplexed streams, low latency handshakes, and zero round trip time resumption, QUIC can enhance the performance of cloud-based computing systems.

E-Commerce and Financial Payment

In e-commerce, the improved reliability and speed of QUIC can help ensure that customers have a smooth shopping experience even during peak traffic periods. QUIC provides the performance and security features needed to support e-commerce applications, such as fast page loads and secure payment transactions.

As the technologies continue to evolve and mature, we can expect to see even more diverse and innovative use cases emerge, driving the development of new applications and services.

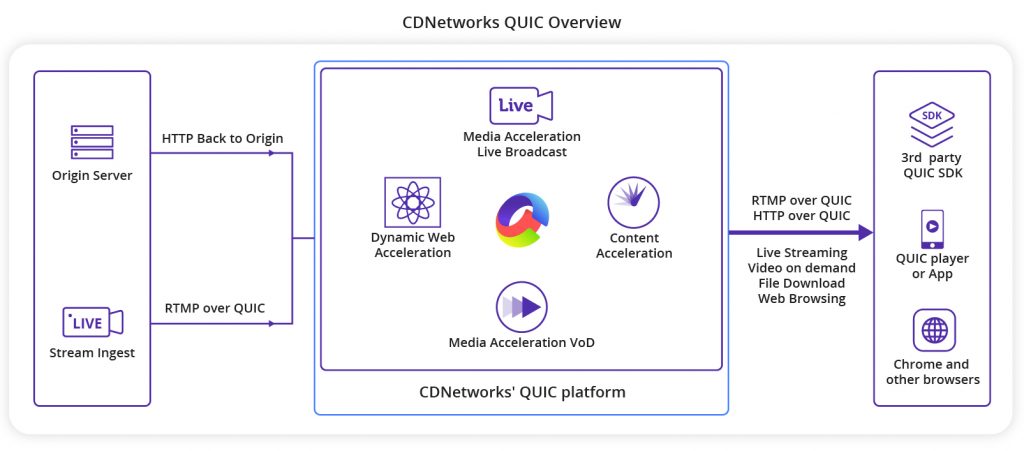

CDNetworks Supports HTTP/3 and QUIC Across Its Full Platform

CDNetworks foresaw the future potential of the QUIC protocol and took the lead in its development. To embrace the new era of HTTP/3, CDNetworks implemented QUIC support on its platform five years ago. After the formal release of the HTTP/3 standard, and in response to market demand, it upgraded its entire platform, building on the original QUIC implementation, to fully support all versions of gQUIC (Google QUIC) and iQUIC (IETF QUIC).

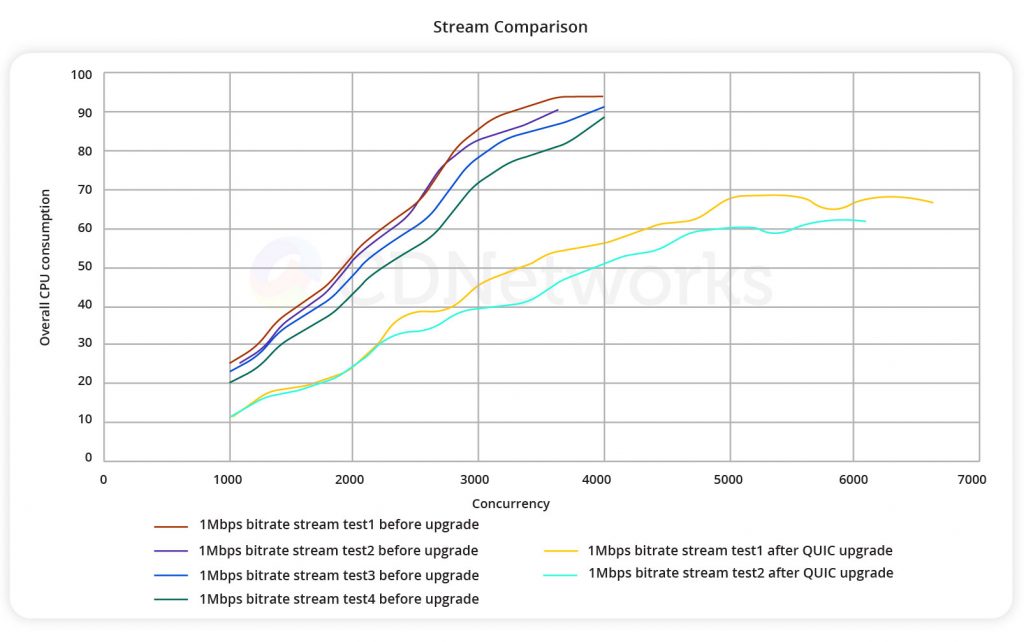

Internally, CDNetworks improved its platform’s frame processing capacity and optimized performance to reduce machine resource consumption. According to part of our internal testing data, in a QUIC stream-pull scenario at a 1 Mbps bitrate, the new platform improves bandwidth performance by 41% at the same level of business concurrency, while average CPU consumption is reduced by 28%.

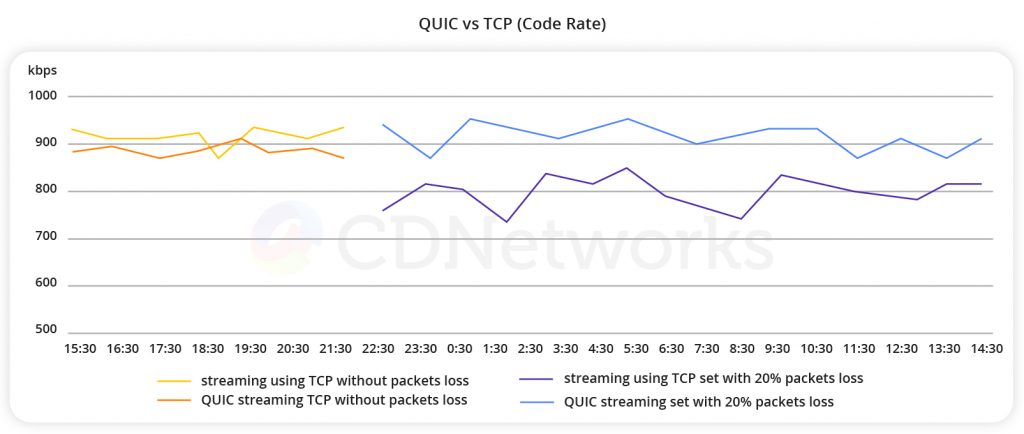

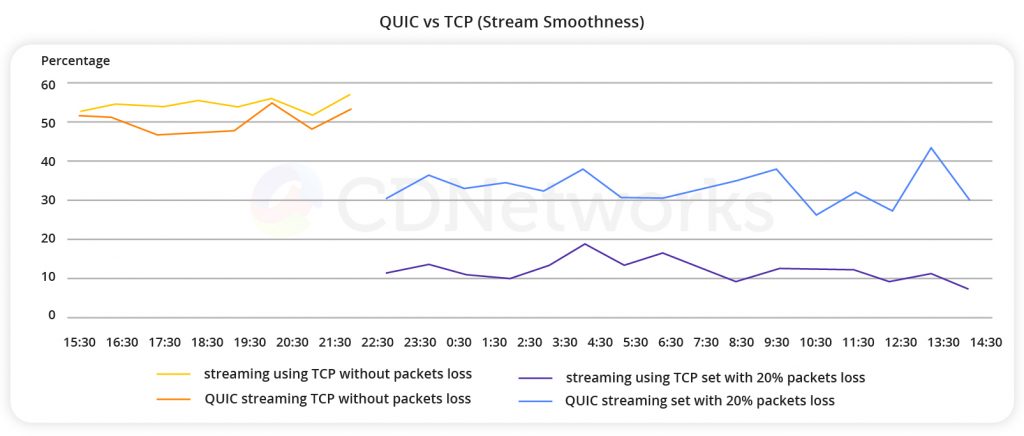

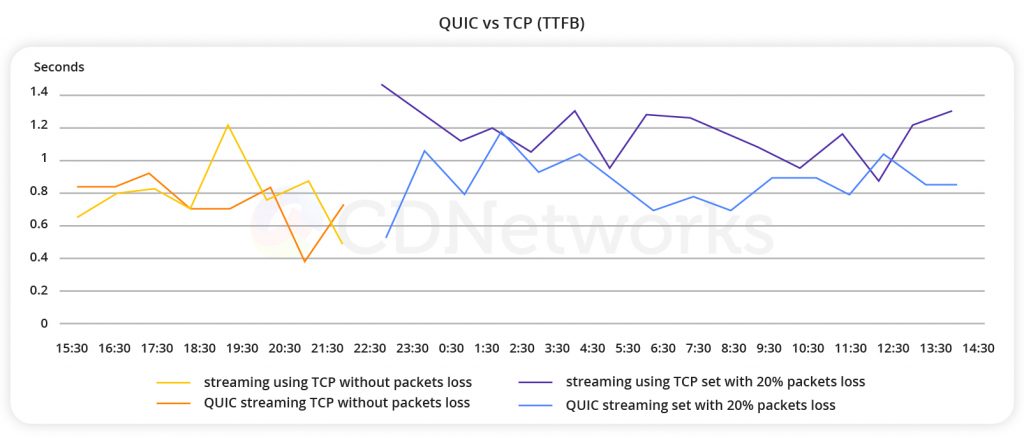

For live streaming, CDNetworks carried out intensive quality optimization: it improved the retransmission efficiency and rate-sampling calculation of its QUIC implementation, and optimized UDP packet transmission and GSO (Generic Segmentation Offload) strategies. Together, these changes effectively address unstable video streaming quality on poor cross-region networks. The following results come from one of our tests of a live streaming pull scenario, comparing video playback over QUIC and TCP:

[Bitrate]

In an environment without packet loss, QUIC and TCP have similar bitrates, but under 20% packet loss, QUIC maintains a consistent bitrate while TCP’s decreases significantly.

[Video Smoothness]

In terms of smoothness, a test sample is counted as stuttering if the player has to refill its buffer during a one-hour cycle. Without packet loss, QUIC has slightly lower smoothness than TCP because of the additional encryption performed when packaging data at the application layer. Under packet loss, however, QUIC is significantly better than TCP.

[TTFB]

TTFB (Time to First Byte) is the time between the test machine initiating the playback request and receiving the first video packet. QUIC maintains a consistent first-packet delivery time under 20% packet loss, while TCP adds significant latency.

Related Products about QUIC

To learn more about how CDNetworks can help you deliver fast QUIC streaming, please contact us or request a free trial.